Motivation for Adaptive Step Sizes

- Instead of a fixed global \( \eta \), use an adaptive learning rate for each parameter that depends on the history of gradients.

- Parameters that have large accumulated gradient magnitude should get smaller steps (they've been changing a lot), whereas parameters with small or infrequent gradients can have larger relative steps.

- This is especially useful for sparse features: Rarely active features accumulate little gradient, so their learning rate remains comparatively high, ensuring they are not neglected

- Conversely, frequently active features accumulate large gradient sums, and their learning rate automatically decreases, preventing too-large updates

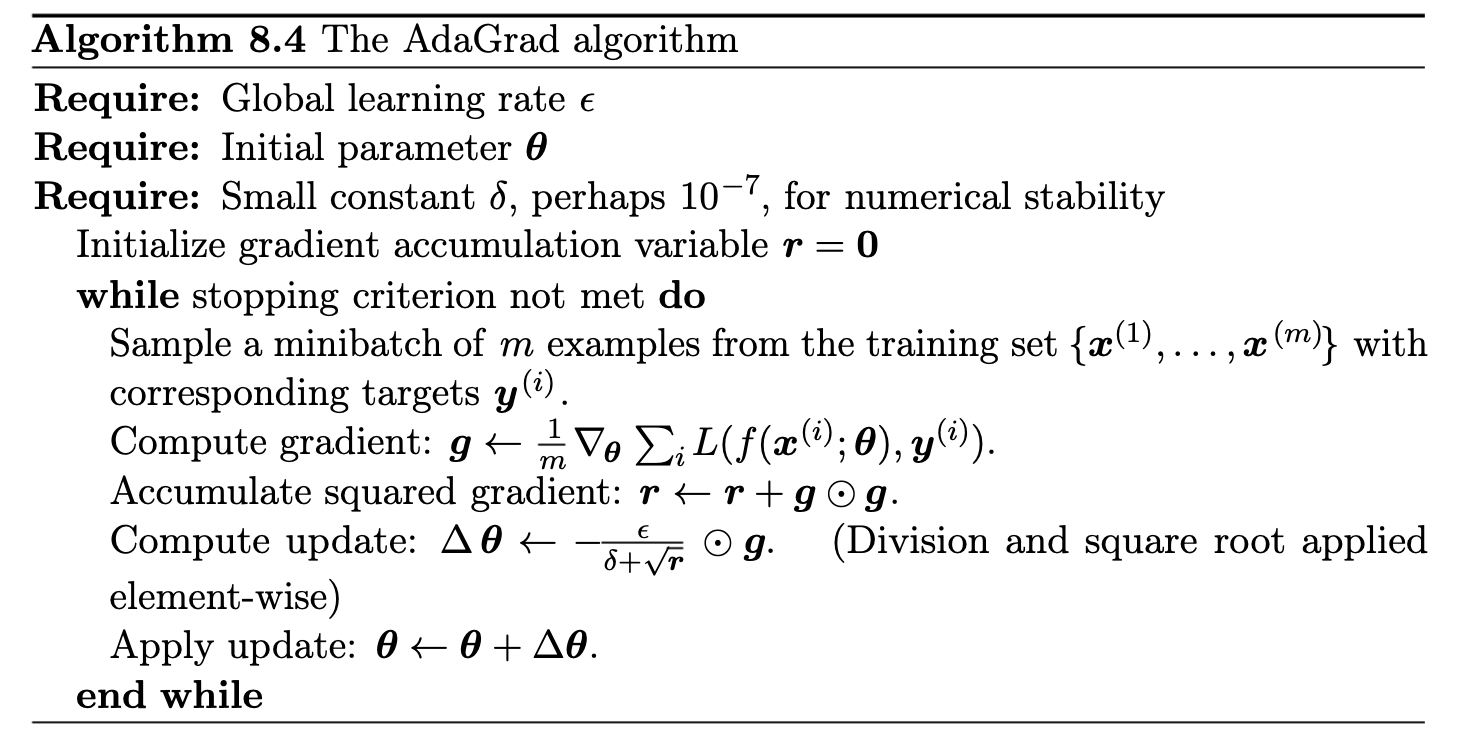

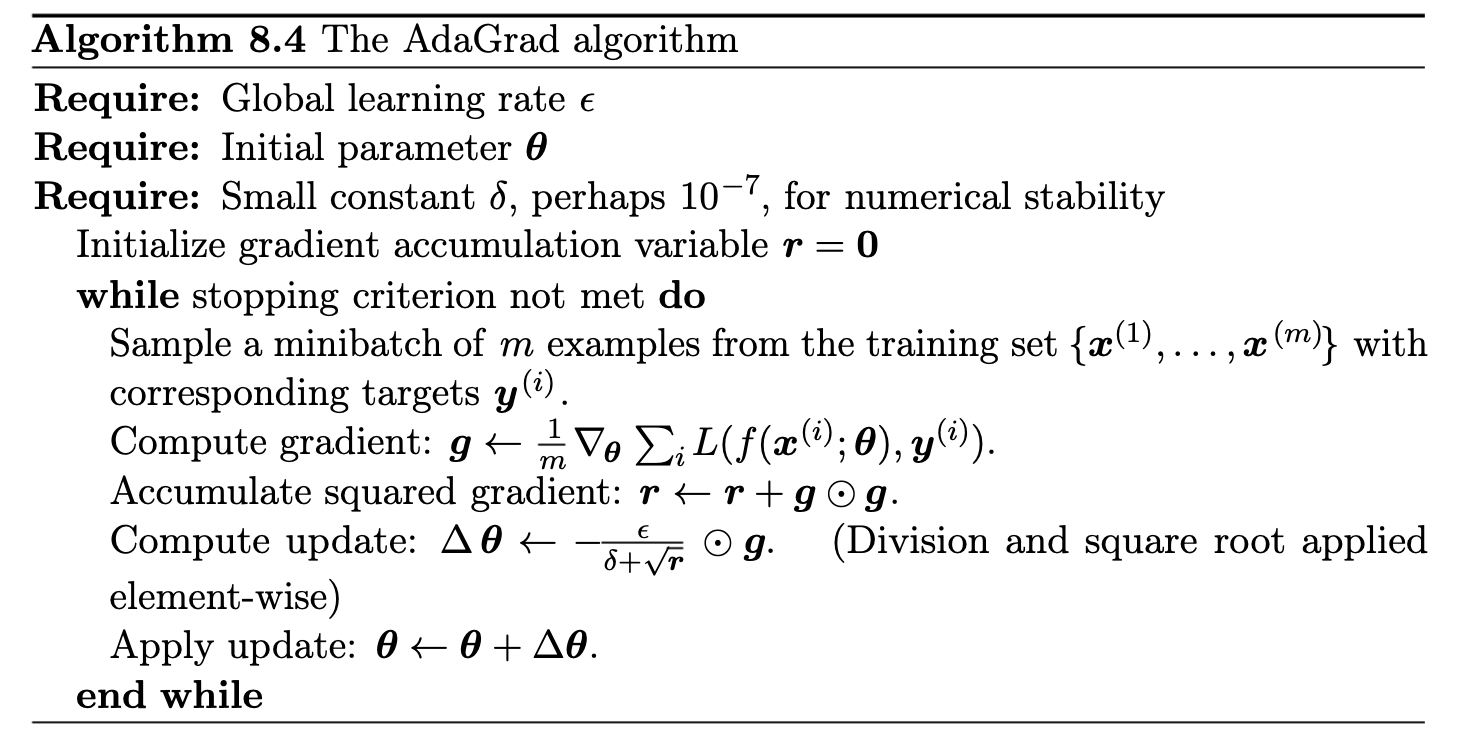

- Several algorithms implement this idea (AdaGrad, RMSProp, AdaDelta, Adam, etc.). We will derive **AdaGrad**, one of the first adaptive methods.