RMSProp: Adaptive Learning Rates

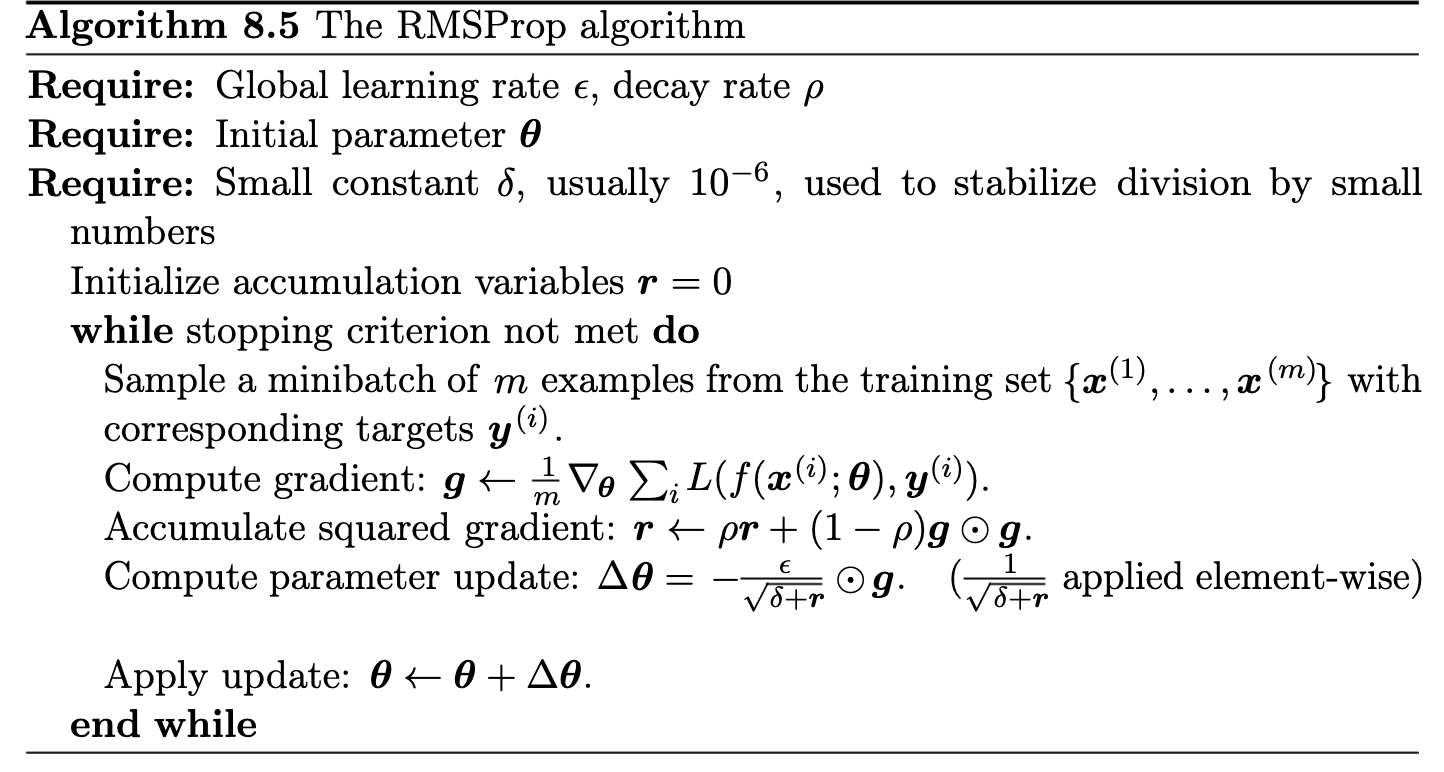

Addresses AdaGrad’s diminishing learning rate issue. Uses a decaying average of squared gradients (instead of a cumulative sum):

$$ v_t = \rho v_{t-1} + (1-\rho)(\nabla C(\theta_t))^2, $$with \( \rho \) typically \( 0.9 \) (or \( 0.99 \)).

- Update: \( \theta_{t+1} = \theta_t - \frac{\eta}{\sqrt{v_t + \epsilon}} \nabla C(\theta_t) \).

- Recent gradients have more weight, so \( v_t \) adapts to the current landscape.

- Avoids AdaGrad’s “infinite memory” problem – learning rate does not continuously decay to zero.

RMSProp was first proposed in lecture notes by Geoff Hinton, 2012 – unpublished.)

RMSProp algorithm, taken from Goodfellow et al